Dispelling Myths Around SGX Malware

SGX-based malware may not be as troublesome as many believe. We'll explain why, and how Symantec is ready to deal with such malware should it appear.

A group of security researchers from Graz University of Technology recently disclosed detailed methods of deploying attacks from inside an Intel SGX secure enclave. The research paper received considerable media attention, probably because of the recently discovered CPU architecture vulnerabilities Meltdown and Spectre. The researchers also released proof-of-concept (PoC) code for Linux that successfully escapes the enclave.

Symantec researchers implemented a similar PoC on the Windows platform and used it to show that protection against such an attack is not only possible but has already been included in Symantec protection products for many years. A common belief is that it is practically impossible to detect SGX-escaping malware. Later on, we will explain why this claim is misleading and how our protection works.

SGX in a nutshell

SGX, or Software Guard Extensions, was designed by Intel to provide a trusted, safe environment for critical algorithms in computer security use cases. The design principle is to place critical trusted code where no malicious actor can manipulate it. Such code can include user authentication, checks on user and process privileges, or the calculation of digital signatures. It should be completely separated and protected from malicious actors to ensure full trust.

Intel achieved these goals by providing a secure enclave that can hold and run code. This is not to be confused with protected mode, which has existed in the Intel x86 architecture since the 1980s. Protected mode helps separate processes by reducing the risk of one process inadvertently overwriting data in another process's memory. However, it was not designed to protect against malicious actors.

Code in the secure enclave can be trusted because it cannot be altered by other apps or malware. Its state is always treated as known, and its code and data are assumed to be intact at all times. Such code is therefore referred to as a trusted component.

The state, code, and data of a normal app, on the other hand, cannot be guaranteed to remain unchanged by software bugs or malicious actors. They are therefore treated as untrusted, and such an app is called an untrusted component. It is worth mentioning that secure enclave protection is provided in hardware by the CPU; it is not a software-enforced method provided by the operating system or third-party software. In fact, the operating system runs outside the secure enclave, so it too is treated as untrusted.
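This trust split has a one-way quality that matters later: untrusted code, including the operating system and security software, cannot look into the enclave, while enclave code runs inside its host application's address space and can reach the host's ordinary memory. The Python below is a deliberately simplified model of that rule, not real SGX code; `Region` and `can_access` are hypothetical names, and a single boolean stands in for checks the CPU performs in hardware.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Region:
    """A hypothetical memory region inside one host process's address space."""
    name: str
    in_enclave: bool  # True if the region belongs to the enclave

def can_access(running_in_enclave: bool, region: Region) -> bool:
    """Toy access rule; real SGX enforces this in the CPU, not in software."""
    if region.in_enclave:
        # Enclave memory: only code running inside the enclave may touch it.
        # The OS, other apps, and security scanners are all outside.
        return running_in_enclave
    # Ordinary host memory: reachable by the host app itself and also by
    # enclave code, because the enclave lives in the host's address space.
    return True

enclave_heap = Region("enclave heap", in_enclave=True)
host_stack = Region("host stack", in_enclave=False)

print(can_access(False, enclave_heap))  # False: untrusted code cannot read the enclave
print(can_access(True, host_stack))     # True: enclave code can reach host memory
```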

Can Intel SGX be considered an ultimate solution for running secure code?

In software, problems always arise in the details of implementation and in unknowns not considered during the design phase. Intel's SGX design seems to focus on good intentions and benign use cases, assuming that only trusted actors will use it and that malware will therefore only ever exist as an untrusted component. We can infer this from the code installation process, which is protected by digital signatures and works on the assumption that bad actors cannot obtain signing keys or find other ways to install malicious components into the secure enclave.

Intel requires that secure enclave code be signed with a trusted digital signature, on the assumption that bad actors will never be able to install their code there. In reality, this approach offers little protection.

According to Graz University of Technology's research, "Intel does not inspect and sign individual enclaves but rather white-lists signature keys to be used at the discretion of enclave developers for signing arbitrary enclaves." The researchers also said "a student […] independently of us found that it is easy to go through Intel’s process to obtain such signing keys" and that "the flexible launch control feature of SGXv2 allows bypassing Intel as intermediary in the enclave launch process." Malware can also infect a software developer's machine and inject its code directly into the supply chain of software products. This has been done before, as happened with Avast's CCleaner.
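The quoted point, that keys rather than individual enclaves are whitelisted, can be illustrated with a toy launch policy. In SGX, an enclave's signer identity (MRSIGNER) is a SHA-256 measurement derived from the signer's public key; in this hypothetical Python sketch a "key" is just a byte string, and `mrsigner` and `may_launch` are illustrative names. Signature verification is omitted on purpose so that the sketch models only the policy question of who is allowed to sign.

```python
import hashlib

def mrsigner(public_key: bytes) -> str:
    """Hash a (toy) signer public key into a signer identity."""
    return hashlib.sha256(public_key).hexdigest()

def may_launch(enclave_code: bytes, signer_key: bytes, allowed_signers: set[str]) -> bool:
    """Launch decision based only on who signed, never on what was signed.

    `enclave_code` is accepted but deliberately not inspected: the policy
    modelled here never looks at the enclave contents.
    """
    return mrsigner(signer_key) in allowed_signers

allowed = {mrsigner(b"vendor-key-A")}
print(may_launch(b"benign enclave", b"vendor-key-A", allowed))     # True
print(may_launch(b"malicious enclave", b"vendor-key-A", allowed))  # True: same whitelisted key
print(may_launch(b"benign enclave", b"unknown-key", allowed))      # False
```

Once a key is on the list, whatever its holder signs passes the check, which is why obtaining a key, or compromising a developer who has one, is enough.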

Digital signatures can also be overcome by brute force, because the protection these algorithms offer rests on the limits of current computing power and technology. People often underestimate the amazing progress in the computing power of new hardware and, of course, the virtually unlimited power of distributed systems. An attacker can hijack the collective power of millions of computers and leverage those resources for malicious activities. With such a distributed system, an attacker could search for hash collisions and, in theory, break the signature checks.


What happens when malicious code gets into the secure enclave?

Based on what we have discussed so far, we can assume that malicious code can—and ultimately will—be installed into the secure enclave.

At first, we might think that is okay. The enclave creates a restricted environment in which code lacks the normal user-space environment and cannot execute code located outside the enclave. A malicious trusted component is unable to use any operating system APIs, which means it cannot create files, write to the registry, send data over the network, or download files from the internet. Any malware residing in the secure enclave is highly limited in what it can do.

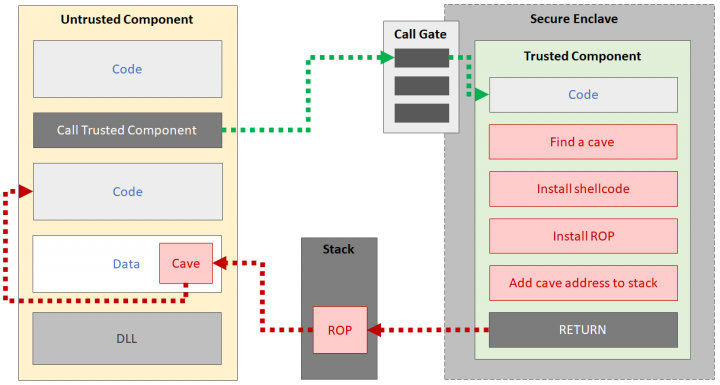

However, enclave code can read and write the memory of its host application, the untrusted component. This provides an attack vector for malicious code: it can use known techniques to find caves (unused regions of memory) and inject code into the memory of the untrusted component. Because an untrusted component is just a normal app with all the capabilities Windows provides, the injected code shares the very same privileges and runtime environment.
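A code-cave search can be as simple as scanning memory for long runs of padding bytes. The Python below is a hypothetical illustration, not the PoC's code: `find_caves` scans a byte buffer for runs of a filler byte (0x00 by default; 0xCC padding is also common in compiled code) and reports their offsets and lengths. It deliberately ignores page permissions and whether the region is mapped, which a real implementation would have to determine.

```python
def find_caves(memory: bytes, min_size: int, filler: int = 0x00) -> list[tuple[int, int]]:
    """Return (offset, length) for each run of `filler` bytes at least min_size long."""
    caves = []
    run_start = None  # offset where the current run of filler bytes began
    for i, byte in enumerate(memory):
        if byte == filler:
            if run_start is None:
                run_start = i
        else:
            if run_start is not None and i - run_start >= min_size:
                caves.append((run_start, i - run_start))
            run_start = None
    # A run may extend to the very end of the buffer.
    if run_start is not None and len(memory) - run_start >= min_size:
        caves.append((run_start, len(memory) - run_start))
    return caves

# Hypothetical memory snapshot: a few code bytes separated by zero padding.
mem = b"\x55\x89\xe5" + b"\x00" * 16 + b"\xc3" + b"\x00" * 4 + b"\x90" + b"\x00" * 10
print(find_caves(mem, min_size=8))  # [(3, 16), (25, 10)]
```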

All the malicious trusted component needs to do is place its own code in a cave in the host's memory and redirect the execution path to the cave. It can achieve this with return-oriented programming (ROP). A ROP chain lives on the stack as a series of addresses that will be used as return addresses. Each address points either to a short instruction sequence that ends in a return instruction (a "gadget") or to an API function provided by the operating system or another well-known software library, so each return hands control to the next entry in the chain. When the trusted component returns from execution, SGX switches the CPU back to its normal state and restores the runtime environment so that the untrusted component can continue its execution. By then, the malware has already manipulated the return address, so the ROP chain runs and performs malicious activities, including marking the code inside the cave as executable and running it.
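To make the ROP mechanics concrete, here is a toy Python interpreter for a ROP chain. It is a simplification under stated assumptions: gadgets are Python functions rather than machine code, the gadget addresses and the cave address are hypothetical, and `make_executable` merely stands in for whatever OS call changes page protection. It shows why the chain needs no code of its own: each entry is either data or the address of something that already exists in the process, and the sequence of returns stitches them together.

```python
from typing import Callable

# Toy ROP model: hypothetical addresses, no real machine code.
# The stack is a Python list whose index 0 is the top of the stack.
State = dict
Gadget = Callable[[State, list], None]

def pop_arg(state: State, stack: list) -> None:
    # Like "pop rdi; ret": load the next stack slot into an argument register.
    state["arg"] = stack.pop(0)

def make_executable(state: State, stack: list) -> None:
    # Stands in for an OS call that changes page protection on the cave.
    state["executable"].add(state["arg"])

def jump_to_cave(state: State, stack: list) -> None:
    # Transfers control to the injected code; fails if the page isn't executable.
    if state["arg"] in state["executable"]:
        state["log"].append(f"running cave at {state['arg']:#x}")
    else:
        state["log"].append("crash: cave not executable")

GADGETS: dict[int, Gadget] = {
    0x401000: pop_arg,
    0x401010: make_executable,
    0x401020: jump_to_cave,
}

def ret_loop(stack: list, gadgets: dict[int, Gadget], state: State) -> State:
    # Every `ret` pops the next return address and continues there.
    while stack:
        addr = stack.pop(0)
        gadgets[addr](state, stack)
    return state

CAVE = 0x7F0000
chain = [0x401000, CAVE, 0x401010, 0x401020]  # placed on the host's stack by the enclave
state = ret_loop(chain, GADGETS, {"arg": None, "executable": set(), "log": []})
print(state["log"])  # ['running cave at 0x7f0000']
```

Dropping the `make_executable` entry from the chain makes the final step log a crash instead, which mirrors why the real chain must change the cave's page protection before jumping into it.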

This technique is simple enough for any experienced exploit developer (good or bad actor). The target app does not even need to have vulnerabilities to be exploited.

Could SGX enable super malware?

We have discussed how code running inside the secure enclave cannot be manipulated, and cannot even be read by other processes, including security software that scans memory. Remember, this is by design, to protect against malicious intent. One might think this could allow a new breed of super malware that cannot be detected in memory and therefore cannot be stopped. Wrong!

To be effective, the malicious code still needs to break out of the enclave using the exploitation techniques we previously discussed. That is where modern computer security solutions like Symantec's come in handy. The technique used to break out of the secure enclave is clearly malicious behavior that our existing technologies can readily detect. The malicious code might reside inside the enclave, but it cannot get out; it is effectively jailed for life. It might be able to get in, but it will be blocked every time it tries to get out. It's like the plot of the movie Law Abiding Citizen, where the criminal protagonist wants to be in prison so that he can commit perfect crimes, except we change the story so that he cannot break out of his cell. The rest of the movie is about how he gets caught every single time he tries to escape, and that's how it is for malware inside the SGX enclave: trapped.

When the research was initially released, Intel reiterated that SGX's value lies in executing code in a protected enclave, but that SGX does not guarantee that code executed in the enclave comes from a trusted source. In all cases, Intel recommends using programs, files, apps, and plugins from trusted sources.

As part of the Symantec protection stack, Memory Exploit Mitigation (MEM) actively monitors for exploit techniques, including ROP. Upon detecting behavior that looks like an exploitation attempt, MEM springs into action, enabling products like Norton Security and Symantec Endpoint Protection (SEP) to stop these attacks immediately, before further system compromise occurs.
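MEM's internals are not described here, so the following is not MEM. It is a generic Python sketch of two well-known public ROP heuristics: a stack-pivot check (is the stack pointer still inside the thread's stack?) and a caller check (is each return address immediately preceded by a call instruction?). All names, addresses, and bytes are hypothetical, and the caller check only recognizes two x86 call encodings, so treat it as an illustration of the idea rather than a usable detector.

```python
def preceded_by_call(code: bytes, base: int, ret_addr: int) -> bool:
    """Crude caller check: does a call instruction end right at ret_addr?

    Only two x86 encodings are recognized (an illustrative simplification):
      E8 rel32   direct near call, 5 bytes
      FF D0-D7   call through a register (eax..edi / rax..rdi), 2 bytes
    """
    off = ret_addr - base
    if not (0 <= off <= len(code)):
        return False  # return address outside the known code region
    if off >= 5 and code[off - 5] == 0xE8:
        return True
    if off >= 2 and code[off - 2] == 0xFF and 0xD0 <= code[off - 1] <= 0xD7:
        return True
    return False

def rop_findings(sp: int, stack_low: int, stack_high: int,
                 ret_addrs: list[int], code: bytes, base: int) -> list[str]:
    """Return suspicious observations (an empty list means nothing was flagged)."""
    findings = []
    # Stack-pivot check: a ROP chain often sits outside the thread's real
    # stack, with the stack pointer redirected to it.
    if not (stack_low <= sp < stack_high):
        findings.append("stack pointer outside thread stack")
    # Caller check: legitimate return addresses follow a call; gadget
    # addresses usually do not.
    for r in ret_addrs:
        if not preceded_by_call(code, base, r):
            findings.append(f"return address {r:#x} not preceded by a call")
    return findings

# Hypothetical code bytes at 0x1000: nop, nop, call rel32, nop, pop eax, ret
code = bytes([0x90, 0x90, 0xE8, 0, 0, 0, 0, 0x90, 0x58, 0xC3])
print(rop_findings(0x5000, 0x4000, 0x6000, [0x1007], code, 0x1000))  # []
print(rop_findings(0x9000, 0x4000, 0x6000, [0x1008], code, 0x1000))
```

The second call flags both a pivoted stack pointer and a return into the middle of the `pop eax; ret` gadget, the two signals this sketch is built to surface.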
