Synopsis: As more and more HR functions start to use chatbots to answer queries, HR teams have to address a problem that didn’t use to exist: what happens when someone is mean to something that isn’t conscious?

Yesterday I was chatting to an HRD about a possible change in their structure and approach. It’s a bit of my job that I really enjoy. And we were talking about their adoption of chatbots for volume queries and how that was changing the shape of what people need from the function.

Then she said “And you should see the things someone is writing to it. I think they think they are dating it…”

Which got me thinking about the extent to which we police, investigate or worry about actions that have no impact on the organisation (beyond the time wasted typing nonsense to a chatbot). Actions that might ‘speak to character’ but otherwise do no damage. You can’t hurt the feelings of a chatbot and you can’t harass it, as it can’t feel distress. But you can be disturbingly odd.

I’m reading Everybody Lies by Seth Stephens-Davidowitz and it is fascinating. He uses Google search terms to get a more honest picture of the world than is available through asking people in polls or surveys. He uses this to address a wide range of questions from ‘Was Freud right about us fancying our parents?’ (apparently) to ‘What percentage of the US population is likely to be gay?’ (he estimates it as twice the ‘official’ figure – which is food for thought).

People reveal more of themselves online than they do when asked elsewhere, if only because elsewhere ‘social desirability bias’ kicks in: our desire to give answers that we’d like to associate with our character, or that we’d like other people to associate with it. On Google we also have an incentive to reveal more of ourselves: we get information in return. Maybe it’s the same with chatbots.

So here are some things I’ve been thinking about.

- A common query for Alexa is to ask what ‘she’ is wearing. If someone asked your chatbot that, would you be concerned? Would you take action?

- Should you ethically even be looking at queries that people might think are anonymous? How clearly would we need to label the nature of the interactions?

- If someone threatened violence against a chatbot would you consider that worrying enough to intervene?

- If someone asked for information about a human colleague through the chatbot in a sinister or odd way, would that be enough to act? ‘Can you give me the home address for the Amy who is sexy and sits in procurement?’

- Would your employment policies currently cover anything other than aggression towards a human or damage to property? Would you even know without checking? Is it misuse of IT equipment? There’s a recent example of the complexity in this area: the suggestion that AI should be able to own a patent.

- Do you not have enough stuff that real people are doing/not doing with other people to be getting on with?

- Could chatbot enquiries reveal anything about organisational culture?

- Will people ask (more regularly) the questions that they sometimes feel worried about asking HR? For instance, ‘What was my notice period again?’

Anyway, just some thoughts. I’m off to be nice to Alexa.

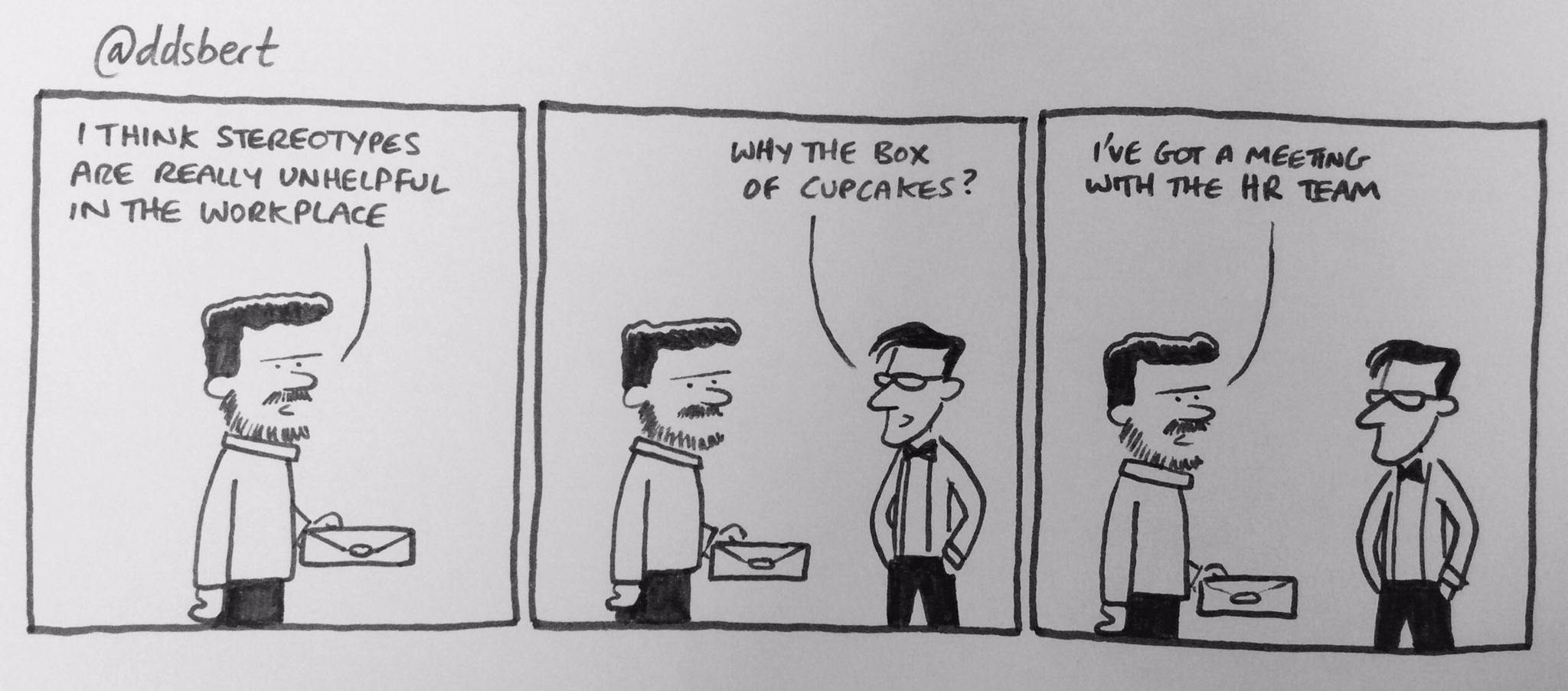

This isn’t what a chatbot looks like, but I had very few photos available to me in the WordPress free section… Rob McCargow will love it.